Analyzing satellite image collections on public cloud platforms with R

CONAE spring school 2022

Marius Appel

Sept. 27, 2022

Motivation

Data availability (e.g. Sentinel-2) in the cloud

Method availability (e.g. in R, > 18k CRAN packages)

Who wants to download > 100 GB from data portals?

Tutorial overview

Objective: Show how you can analyze satellite image collections in the cloud with R

- Introduction:
  - Cloud computing
  - Satellite imagery in the cloud
  - Cloud-native geospatial technologies
  - R ecosystem
- Live examples:
  - Creating composite images
  - Complex time series analysis
  - Extraction from data cubes
- Discussion

All materials are available on GitHub: https://github.com/appelmar/CONAE_2022.

1. The cloud

“… in the cloud”

Services:

Infrastructure providers:

- Amazon Web Services (AWS)

- Google Cloud Platform

- Microsoft Azure

- …

Somewhere in between: Microsoft Planetary Computer

In this tutorial, we will use a custom machine on AWS to analyze satellite image collections in the cloud.

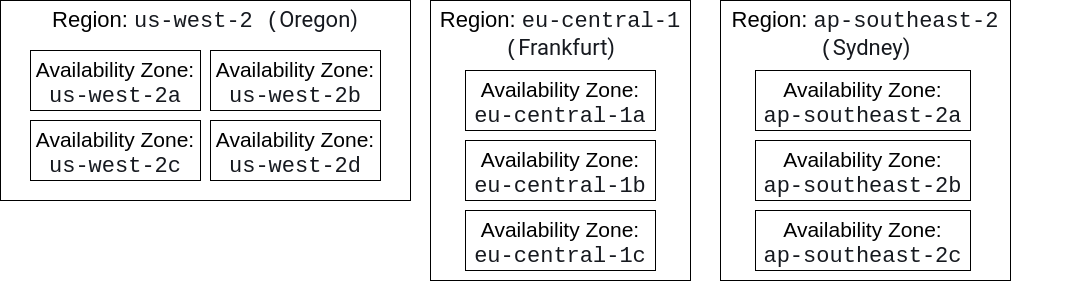

Cloud infrastructure (AWS)

- Lots of separate data centers with large clusters

- In total: > 25 regions and > 80 availability zones

- Basic service to run (virtual) machines: EC2 (Amazon Elastic Compute Cloud)

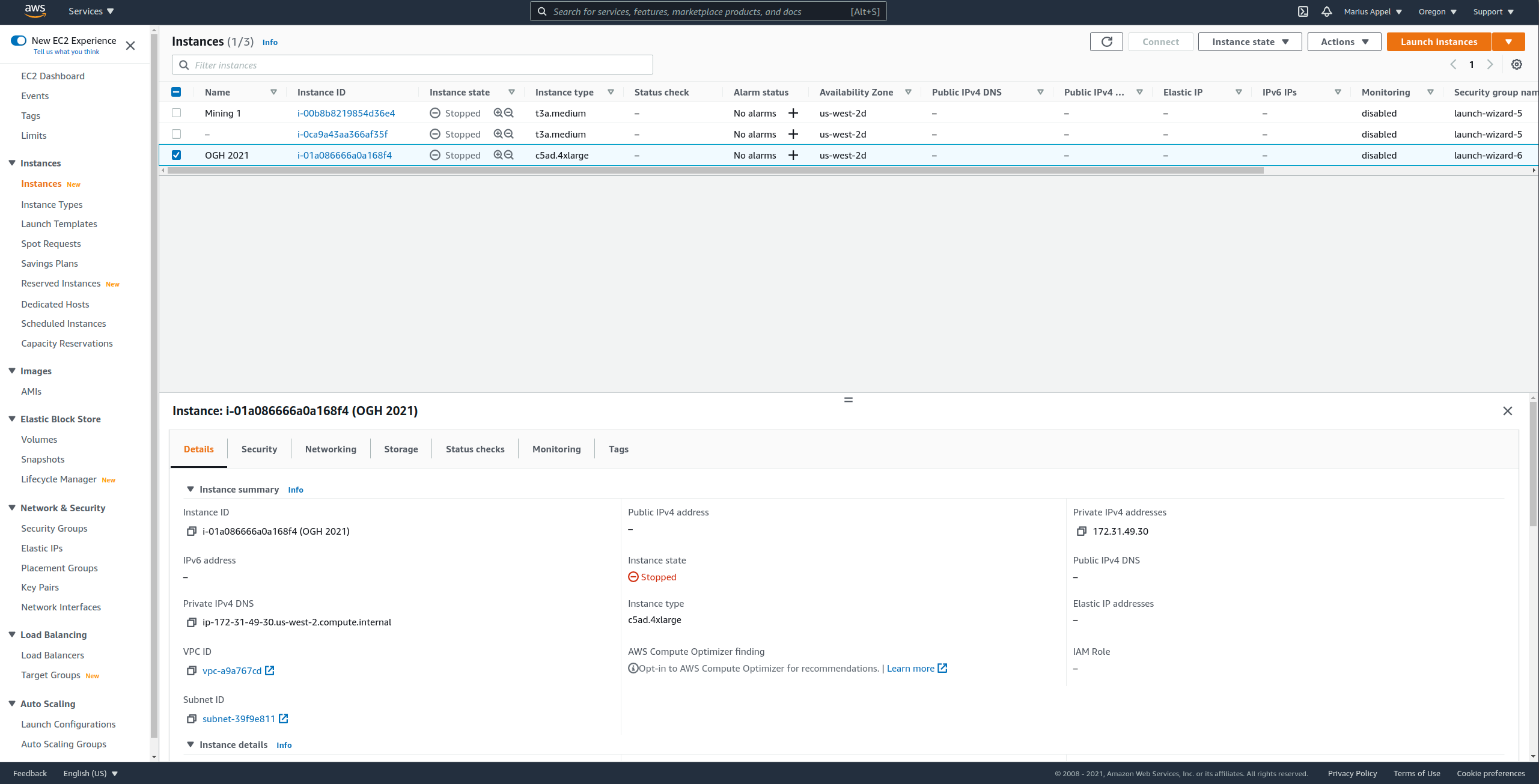

Running a machine in the cloud (AWS)

Select a region and machine instance type, based on costs, hardware, and OS

Create a key pair for accessing the machine over SSH

Click “Launch instance” and follow instructions

Connect via SSH and install software (PROJ, GDAL, R, RStudio Server, R packages, …)

Notice that security considerations (e.g. using IAM roles or multi-factor authentication) are NOT part of this tutorial.

AWS Management Console

2. Satellite imagery on cloud platforms

Example platforms and available data

| Provider | Data |
|---|---|
| Amazon Web Services (AWS) | Sentinel, Landsat, ERA5, OSM, CMIP6, and more |
| Google Cloud Platform | Landsat, Sentinel, access to Google Earth Engine (GEE) data |
| Microsoft Planetary Computer | Sentinel, Landsat, MODIS, and more |

Object Storage: S3

EC2 machines have attached block storage volumes (EBS), but big data archives use highly scalable object storage.

S3 elements:

- Bucket: container for objects that are stored in a specific AWS region

- Objects: Individual files and corresponding metadata within a bucket, identified by a unique key

- Key: file name or path-like string that identifies an object; unique within a bucket

Pricing (storage, transfer, requests):

- Bucket owner pays by default

- For requester-pays buckets, users (requesters) pay for transfer and requests

S3 examples

Buckets:

Object:

Data access

- Buckets are not a drive on your machine

- Data access over HTTP requests (PUT, GET, DELETE, …)
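To make the bucket / object / key terminology and HTTP-based access concrete, here is a minimal Python sketch (the tutorial itself works in R; Python is used only for illustration). It assumes a hypothetical public bucket `example-bucket` in `us-west-2` with a made-up key, and only builds URLs and an unsent GET request. The URL pattern is S3's virtual-hosted style, and `/vsis3/` is GDAL's virtual file system for S3. Real requests to private or requester-pays buckets must additionally be signed with AWS credentials, which is not shown.

```python
from urllib.parse import quote
from urllib.request import Request


def s3_https_url(bucket: str, key: str, region: str) -> str:
    """Virtual-hosted-style HTTPS URL of an S3 object."""
    # "/" in a key is only a naming convention (there are no real folders),
    # so it is kept; other special characters are percent-encoded.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


def gdal_vsis3_path(bucket: str, key: str) -> str:
    """Path for GDAL's /vsis3/ virtual file system (no local copy needed)."""
    return f"/vsis3/{bucket}/{key}"


def get_request(bucket: str, key: str, region: str, requester_pays: bool = False) -> Request:
    """Build, but do not send, an HTTP GET request for one object."""
    headers = {}
    if requester_pays:
        # Requester-pays buckets expect this header (and a signed request).
        headers["x-amz-request-payer"] = "requester"
    return Request(s3_https_url(bucket, key, region), headers=headers, method="GET")


# Hypothetical bucket and key, for illustration only.
bucket, key, region = "example-bucket", "tiles/2022/image B04.tif", "us-west-2"
print(s3_https_url(bucket, key, region))
print(gdal_vsis3_path(bucket, key))
print(get_request(bucket, key, region, requester_pays=True).get_method())
```

The point of the sketch is that an "object" is only reachable through a URL and an HTTP verb, not through a mounted drive.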

Challenges

How to find images by location, time, and other criteria?

How to efficiently read image data from S3 without copying images to our machine storage first?

3. Cloud-native geospatial: STAC, COGs, and data cubes

STAC overview

Standardized JSON-based language for describing catalogs of spatiotemporal data (imagery, point clouds, SAR)

Extensible (available extensions include EO, Data Cubes, Point Clouds, and more)

1.0.0 release available since May 2021

Growing ecosystem

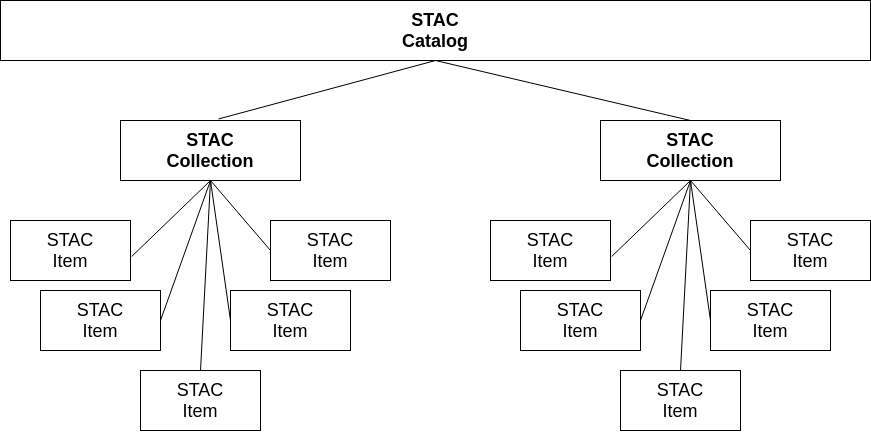

STAC specification

- Items are inseparable objects of data (assets) and metadata (e.g. a single satellite image)

- Catalogs can be nested

- Collections extend catalogs and can be used to group items and their metadata (e.g. license)
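A STAC Item is plain JSON (a GeoJSON Feature). The Python sketch below is illustrative only: the id, collection, coordinates, and URLs are made up. It shows a minimal item with two COG assets and the `eo:cloud_cover` property from the EO extension, plus a simplified check for required top-level fields and a helper that lists the asset links (the "data" part of an item).

```python
import json

# A minimal, hypothetical STAC Item describing one image; all values are made up.
COG = "image/tiff; application=geotiff; profile=cloud-optimized"
item = {
    "type": "Feature",
    "stac_version": "1.0.0",
    "id": "example-scene-20220927",
    "collection": "example-collection",
    "geometry": {"type": "Polygon", "coordinates": [[
        [-64.5, -31.5], [-64.0, -31.5], [-64.0, -31.0], [-64.5, -31.0], [-64.5, -31.5]]]},
    "bbox": [-64.5, -31.5, -64.0, -31.0],
    "properties": {"datetime": "2022-09-27T14:00:00Z", "eo:cloud_cover": 12.5},
    "links": [{"rel": "collection", "href": "https://example.com/collection.json"}],
    "assets": {
        "B04": {"href": "https://example.com/scene/B04.tif", "type": COG},
        "B08": {"href": "https://example.com/scene/B08.tif", "type": COG},
    },
}

# Simplified subset of the top-level fields required by the STAC 1.0.0 Item spec.
REQUIRED = ("type", "stac_version", "id", "geometry", "properties", "links", "assets")


def missing_fields(stac_item: dict) -> list:
    """Names of required top-level fields that are absent."""
    return [name for name in REQUIRED if name not in stac_item]


def asset_hrefs(stac_item: dict) -> dict:
    """Map asset name -> link to the actual file."""
    return {name: asset["href"] for name, asset in stac_item["assets"].items()}


print(json.dumps(item["properties"]))
print(missing_fields(item), asset_hrefs(item)["B04"])
```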

STAC API

Static STAC catalogs

- Typically a set of linked JSON files, starting with a catalog.json

- The catalog JSON contains links to collections, nested catalogs, or items

- Items contain assets (links to files) and metadata

- Problem: searching requires reading and processing all items

- Example: https://meeo-s5p.s3.amazonaws.com/catalog.json
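Why searching a static catalog is expensive can be sketched in a few lines of Python (illustration only; the catalog, hrefs, and items are made up, and a dict stands in for JSON files on object storage, so each lookup corresponds to one HTTP GET). The search follows `child` and `item` links depth-first and has to read every document, even if only one item matches.

```python
from datetime import datetime

# Hypothetical static catalog: the dict stands in for JSON files on object
# storage, keyed by (made-up) relative hrefs. Each lookup = one HTTP GET.
FILES = {
    "catalog.json": {"type": "Catalog", "id": "root",
                     "links": [{"rel": "child", "href": "collection.json"}]},
    "collection.json": {"type": "Collection", "id": "demo",
                        "links": [{"rel": "item", "href": "items/a.json"},
                                  {"rel": "item", "href": "items/b.json"}]},
    "items/a.json": {"type": "Feature", "id": "a", "bbox": [-65, -32, -64, -31],
                     "properties": {"datetime": "2022-01-10T00:00:00Z"}, "links": []},
    "items/b.json": {"type": "Feature", "id": "b", "bbox": [10, 50, 11, 51],
                     "properties": {"datetime": "2022-06-01T00:00:00Z"}, "links": []},
}


def parse_time(s: str) -> datetime:
    # fromisoformat() before Python 3.11 does not accept a trailing "Z".
    return datetime.fromisoformat(s.replace("Z", "+00:00"))


def bbox_intersects(a, b) -> bool:
    """Boxes are [west, south, east, north]."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def search_static(href, bbox, t0, t1, reads):
    """Depth-first walk over child/item links; records every document read."""
    reads.append(href)
    doc = FILES[href]
    if doc["type"] == "Feature":
        t = parse_time(doc["properties"]["datetime"])
        match = bbox_intersects(doc["bbox"], bbox) and t0 <= t <= t1
        return [doc["id"]] if match else []
    hits = []
    for link in doc["links"]:
        if link["rel"] in ("child", "item"):
            hits.extend(search_static(link["href"], bbox, t0, t1, reads))
    return hits


reads = []
hits = search_static("catalog.json", [-66, -33, -63, -30],
                     parse_time("2022-01-01T00:00:00Z"), parse_time("2022-12-31T23:59:59Z"), reads)
print(hits, len(reads))  # only one match, but all 4 documents had to be read
```

With thousands of items per collection, this per-item cost is exactly what a STAC API avoids.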

STAC API

- Web service for dynamic search of STAC items by area of interest, datetime, and other metadata

- Compliant with the OGC API - Features standard
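With a STAC API, a single request to the `/search` endpoint replaces the catalog traversal. The Python sketch below only builds (does not send) such a request. The API root URL is hypothetical, and the collection id is an assumption because ids differ between providers. In R, `rstac`'s `stac_search()` followed by `get_request()` or `post_request()` builds requests of this kind.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

API_ROOT = "https://stac.example.com"  # hypothetical STAC API landing page


def search_body(collections, bbox, start, end, limit=100):
    """Body of a STAC API item search (POST /search)."""
    return {
        "collections": list(collections),
        "bbox": list(bbox),            # [west, south, east, north], WGS84
        "datetime": f"{start}/{end}",  # RFC 3339 interval
        "limit": limit,                # page size; further pages via "next" links
    }


def post_search_request(body: dict) -> Request:
    """Build, but do not send, the POST request."""
    return Request(f"{API_ROOT}/search", data=json.dumps(body).encode("utf-8"),
                   headers={"Content-Type": "application/json"}, method="POST")


def get_search_url(body: dict) -> str:
    """Equivalent GET request: parameters as a query string."""
    params = {
        "collections": ",".join(body["collections"]),
        "bbox": ",".join(str(v) for v in body["bbox"]),
        "datetime": body["datetime"],
        "limit": body["limit"],
    }
    return f"{API_ROOT}/search?{urlencode(params)}"


# The collection id is an assumption; ids differ between providers.
body = search_body(["sentinel-2-l2a"], [-64.5, -31.5, -64.0, -31.0],
                   "2022-01-01T00:00:00Z", "2022-03-31T23:59:59Z")
print(post_search_request(body).get_method(), get_search_url(body))
```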

STAC Index

- A good starting point to find available STAC collections and API services: https://stacindex.org

Cloud-optimized GeoTIFF (COG)

Image file formats must be cloud-friendly to reduce transfer times and the costs of data transfer and requests

COG = regular tiled GeoTIFF files whose content follows a specific order of data and metadata (see the full specification). COGs:

- support compression

- support efficient HTTP range requests, i.e. partial reading of images (blocks and overviews) from cloud storage

- may contain overview images (image pyramids)

GDAL can efficiently read and write COGs and access cloud object storage through virtual file systems (e.g. /vsicurl/, /vsis3/)
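Why HTTP range requests make COGs efficient: a reader first fetches the small file header, then requests only the bytes of the tiles that overlap the area of interest. The Python sketch below illustrates this idea with made-up tile offsets and sizes and unsent requests to a hypothetical URL. It is a simplified illustration, not GDAL's implementation, which also handles overviews, caching, and decompression.

```python
from urllib.request import Request


def tiles_for_window(col_off, row_off, width, height, tile_w, tile_h, tiles_across):
    """Row-major indices of the tiles that overlap a pixel window."""
    first_col, last_col = col_off // tile_w, (col_off + width - 1) // tile_w
    first_row, last_row = row_off // tile_h, (row_off + height - 1) // tile_h
    return [r * tiles_across + c
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]


def merged_ranges(tile_ids, offsets, byte_counts):
    """HTTP Range values for the tiles; byte-adjacent tiles share one range."""
    spans = sorted((offsets[t], offsets[t] + byte_counts[t]) for t in tile_ids)
    merged = []
    for start, end in spans:                 # half-open [start, end)
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return [f"bytes={s}-{e - 1}" for s, e in merged]  # Range ends are inclusive


# Hypothetical 1024 x 1024 COG with 256 x 256 tiles (4 x 4 tiles). In a real
# file, offsets and sizes come from the TIFF header (TileOffsets, TileByteCounts).
offsets = [500 + 1000 * i for i in range(16)]
byte_counts = [1000] * 16

tiles = tiles_for_window(300, 10, 400, 200, 256, 256, tiles_across=4)
ranges = merged_ranges(tiles, offsets, byte_counts)
requests = [Request("https://example.com/image.tif", headers={"Range": r}) for r in ranges]
print(tiles, ranges)  # [1, 2] ['bytes=1500-3499']
```

Only 2,000 of roughly 16,500 bytes are transferred here. Reading from an overview level works the same way, just with a different set of tile offsets.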

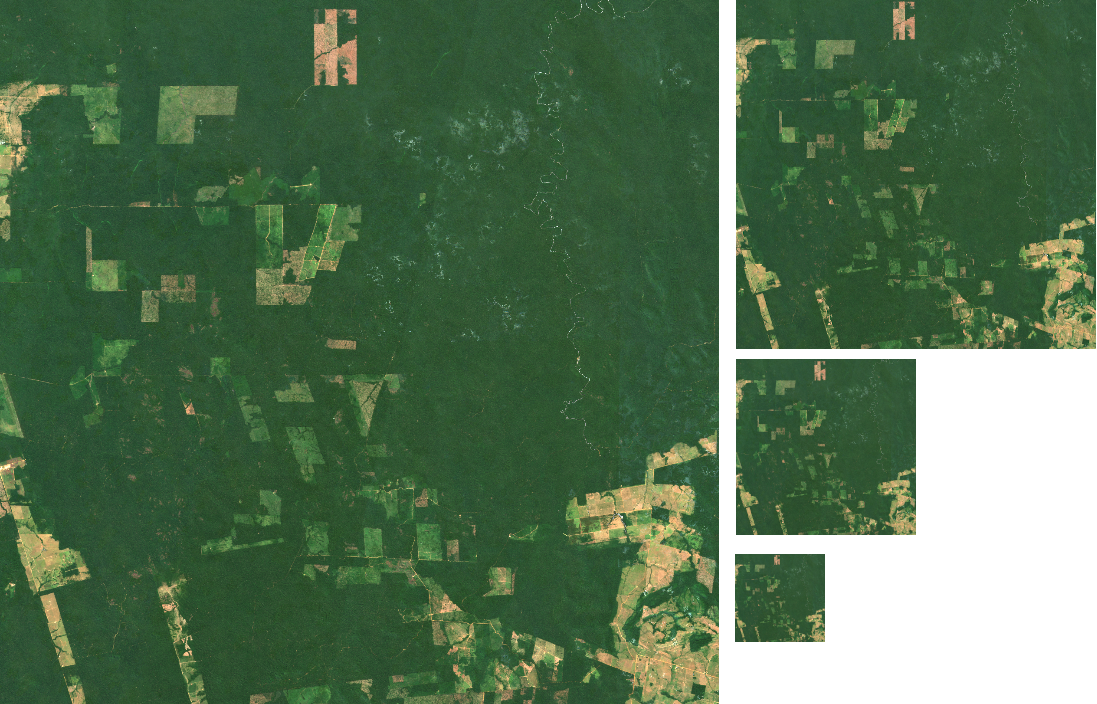

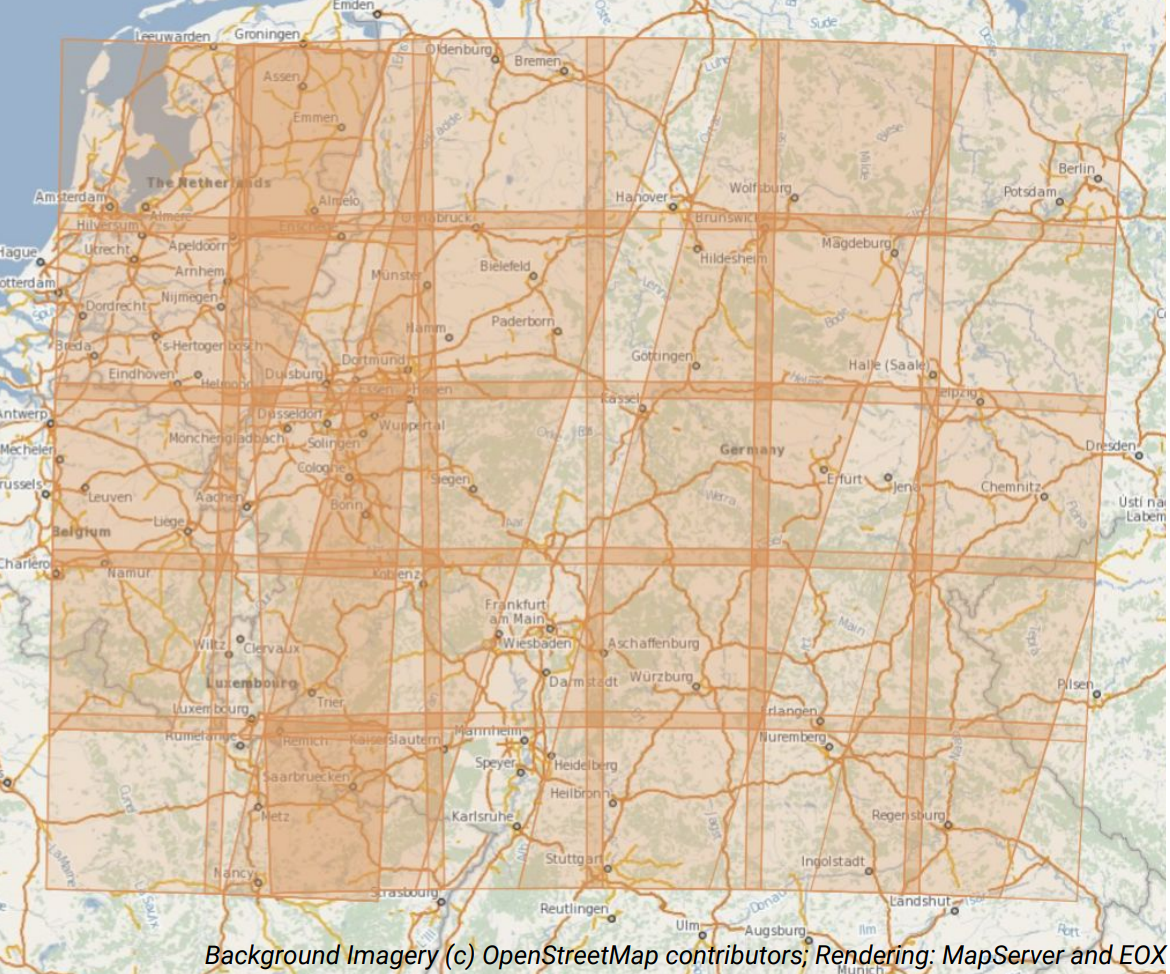

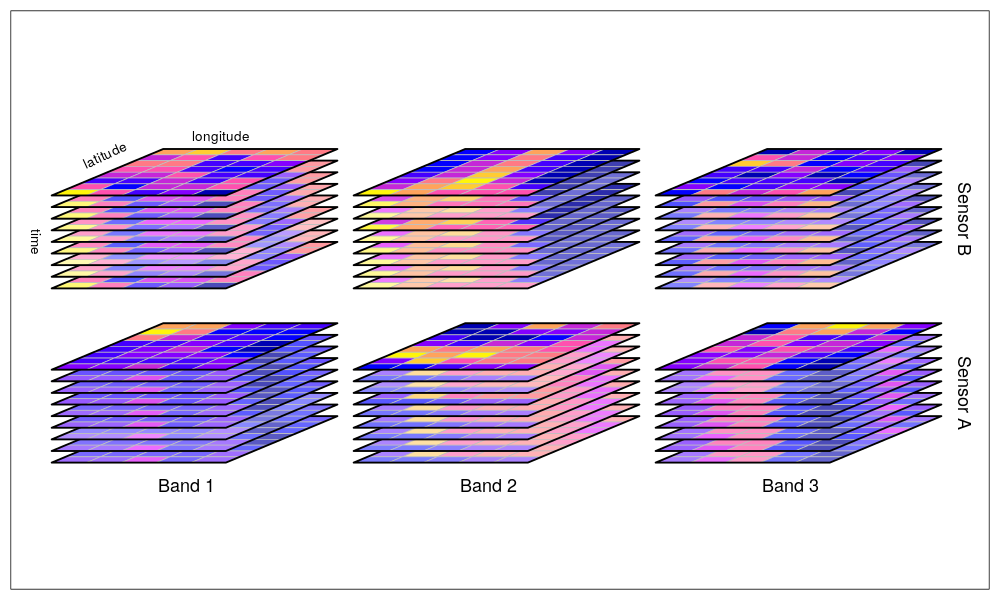

Satellite image collections

Images spatially overlap, have different coordinate reference systems, have different pixel sizes depending on the spectral band, and yield irregular time series for larger areas

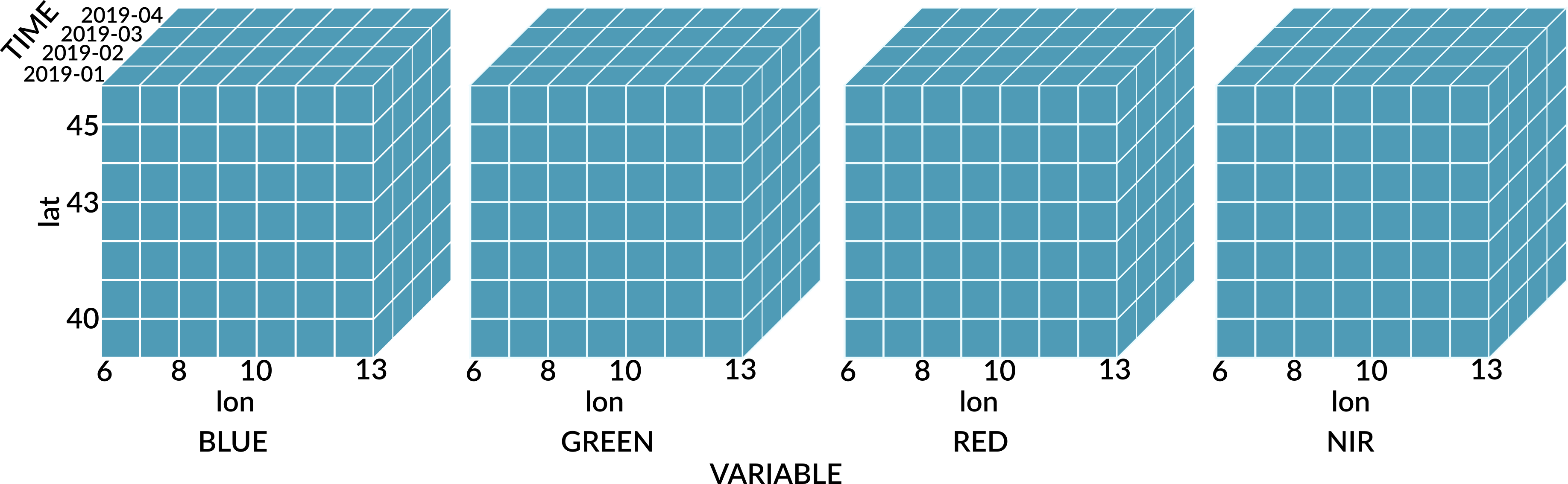

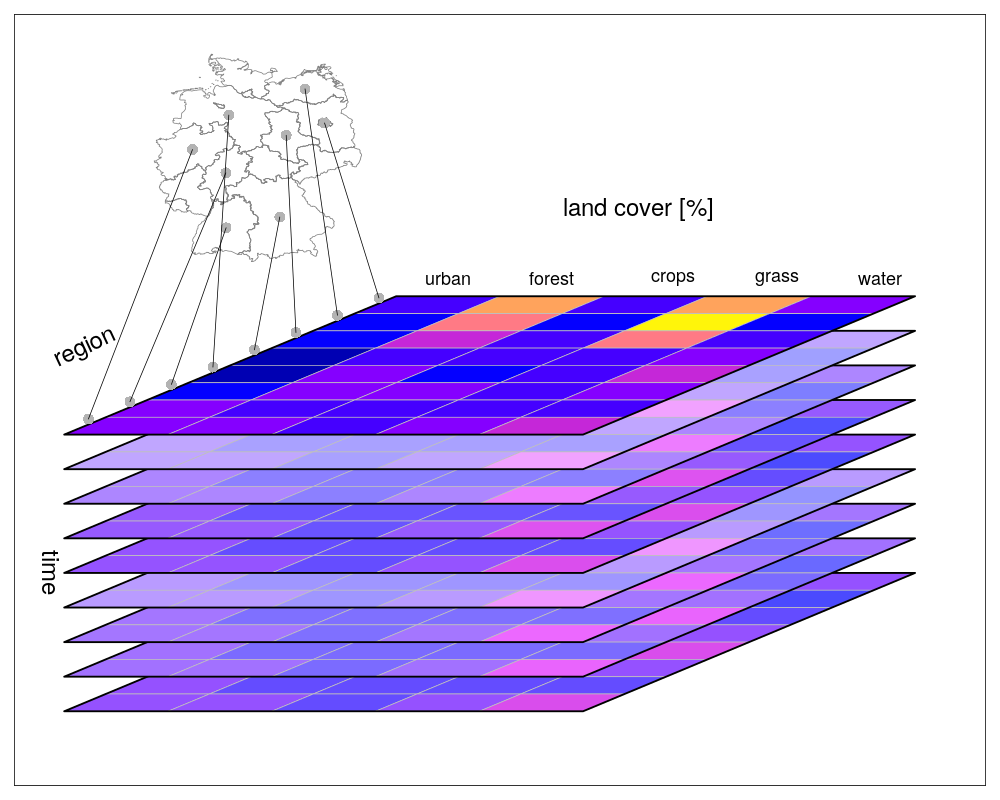

What is a data cube?

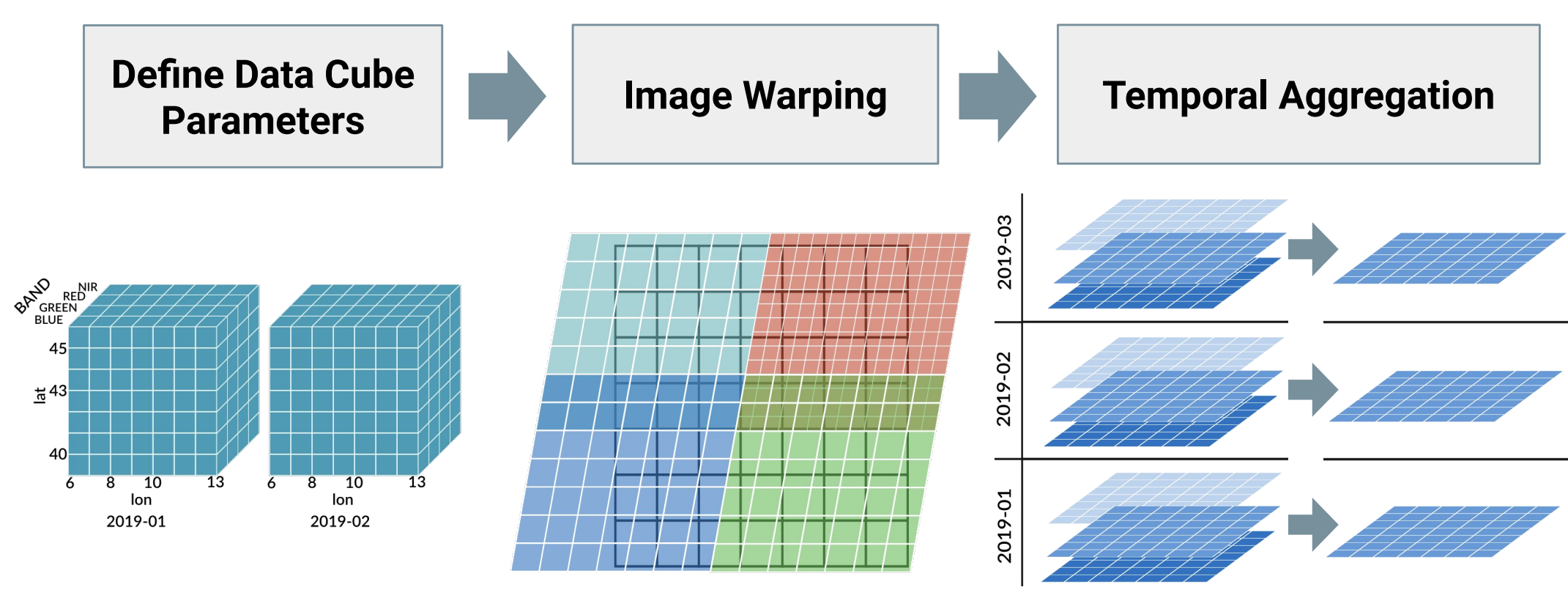

Here: A four-dimensional (space, time, variable / band) regular raster data cube

- collect all observations in one object

- \(b \times t \times y \times x \rightarrow\) number

- single CRS; cells have constant temporal duration and spatial size
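The regular time axis is where information gets lost. The Python sketch below (not gdalcubes code; the observations are made up) bins irregular, partly cloud-masked observations of a single pixel into monthly cells and aggregates each cell. Changing the cell duration or the aggregation function yields a different cube from the same images. In gdalcubes, the corresponding choices are made in the data cube view, e.g. through the `dt`, `aggregation`, and `resampling` arguments of `cube_view()`.

```python
import math
from datetime import date
from statistics import median

# Hypothetical irregular observations of ONE pixel (e.g. NDVI) from several
# overlapping images; None marks a cloud-masked value. Values are made up.
observations = [
    (date(2022, 1, 3), 0.61), (date(2022, 1, 8), None), (date(2022, 1, 13), 0.65),
    (date(2022, 1, 28), 0.58), (date(2022, 2, 12), None),
    (date(2022, 3, 4), 0.52), (date(2022, 3, 9), 0.55),
]


def monthly_cells(obs, year, months, aggregate=median):
    """Regular monthly time axis: one value per cell, NaN if no valid data."""
    cells = {}
    for m in months:
        values = [v for d, v in obs if d.year == year and d.month == m and v is not None]
        cells[m] = aggregate(values) if values else math.nan
    return cells


print(monthly_cells(observations, 2022, [1, 2, 3]))                 # median per month
print(monthly_cells(observations, 2022, [1, 2, 3], aggregate=max))  # another valid cube
```

January's three valid values collapse into one number, and February becomes an empty (NaN) cell, even though images were acquired in that month.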

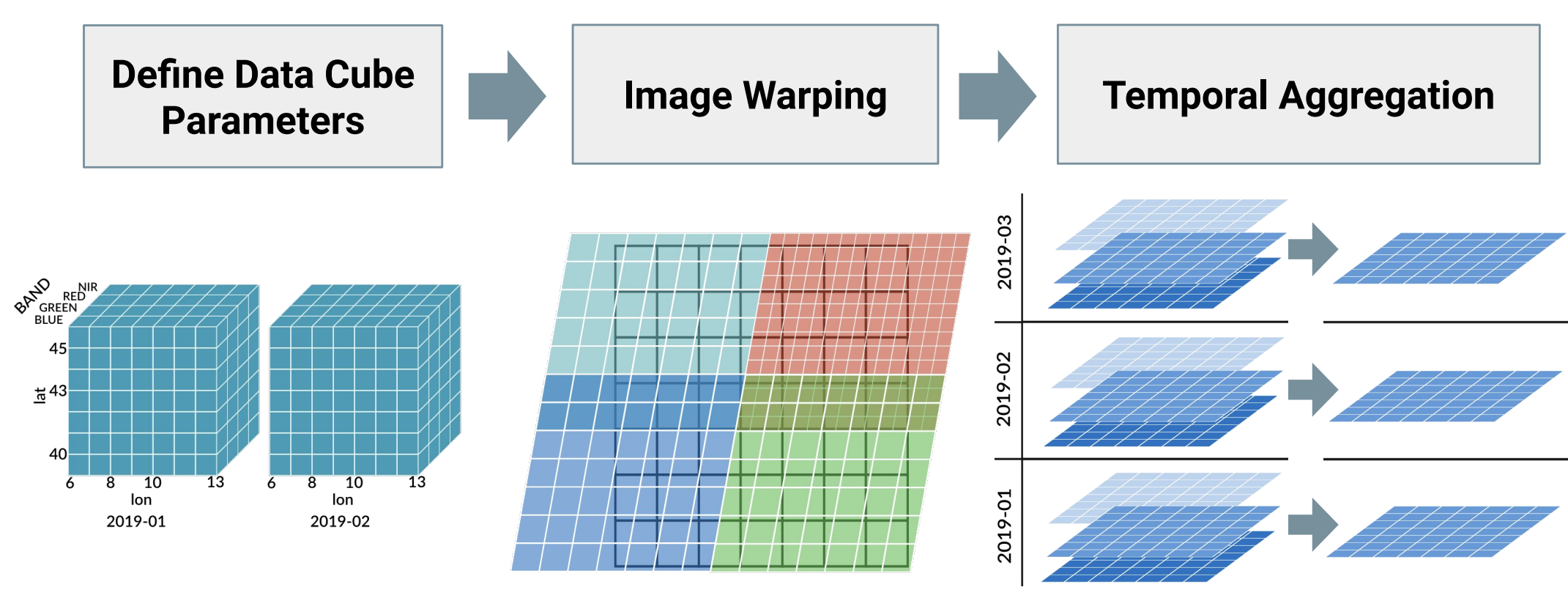

Data cube creation is lossy

Important: There is no single correct data cube!


4. R ecosystem for analyzing satellite imagery (in the cloud)

R packages

- General packages for raster data analysis

- terra (Hijmans 2020) is a newer (faster) package that replaces raster (Hijmans 2019)

- Support two- or three-dimensional rasters

- Include lots of analysis tools

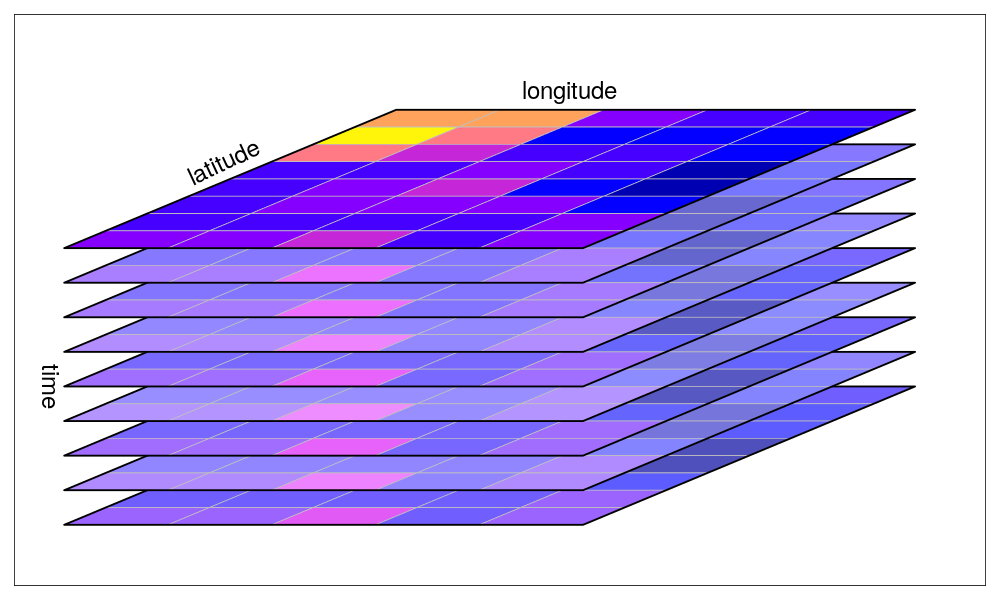

stars

- Flexible package for spatiotemporal arrays / data cubes with arbitrary number of dimensions (Pebesma 2019)

- Supports raster and vector data cubes

Imagery from https://r-spatial.github.io/stars

gdalcubes

Creation and processing of four-dimensional (space, time, variable) data cubes from irregular image collections (Appel and Pebesma 2019)

Parallel chunk-wise processing

Documentation available at https://gdalcubes.github.io/

sits

- Generic package for satellite image time series analysis (Simoes et al. 2021)

- Builds on top of previous packages

- Includes sophisticated methods with a focus on time series classification

- Documentation: https://e-sensing.github.io/sitsbook/

Imagery from https://github.com/e-sensing/sits

- rstac (Brazil Data Cube Team 2021): Query images from STAC-API services

- sp (Pebesma and Bivand 2005): replaced by sf and stars

- openeo (Lahn 2021): Connect to and analyze data at openEO backends

This tutorial focuses on the packages rstac and gdalcubes.

Live examples

Discussion


Advantages

Access to huge data archives

Flexibility: You can do whatever you can do on your local machine

Powerful machines available

Open source software only

Disadvantages

Not free

Google Earth Engine (GEE) and similar platforms can be easier to use (some are free)

Your institution’s computing center might have more computing resources (for free)

Setup and familiarization needed

Depends on the existence of STAC-API services and imagery as COGs!

→ Which tools, platforms, and environments are most efficient highly depends on factors like data volume, computational effort, data and method availability, the effort needed for familiarization and reimplementation, and others.

Summary

Cloud-computing platforms host large archives of satellite data

Cloud storage differs from local storage

Technology and tools:

- STAC (and STAC API!) for efficient and standardized search of spatiotemporal EO data

- COGs allow efficiently reading parts of imagery, potentially at lower resolution

- GDAL provides efficient data access on cloud storage (COG support, virtual file systems)

- gdalcubes makes the creation and processing of data cubes from satellite image collections in R easier

Thanks!

Slides and notebooks:

https://github.com/appelmar/CONAE_2022

Contact: